For the last few weeks I have been super interested in absorbing content about the latest advances in AI art generators (DALL-E 2, Midjourney, Imagen, Stable Diffusion, ...), and I have had great fun playing around with them myself. I find the subject fascinating on a number of levels, and both the results and the discussion around them very engaging. From what I have seen, thoughts and feelings about this technology vary widely. At the same time, a lot of people seemingly have not taken note of what is happening at all, or do not think it is a big deal. I find that surprising, because to me it is totally mind-blowing how good and accessible this technology has already become. I feel it has huge implications for the creative industries in the short term, and perhaps even for the camera market to a degree.

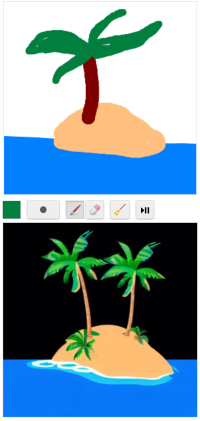

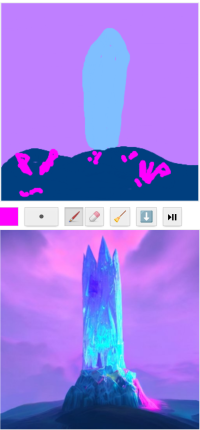

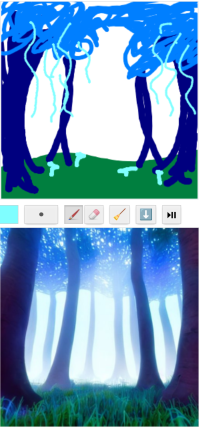

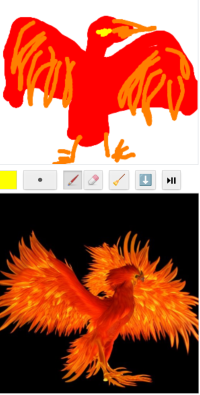

For those who may be unaware of what I am talking about: research into creating images with artificial neural networks has been going on for a while. It has produced interesting results for certain niche applications, like generating pictures of realistic human faces (this site will generate a unique human face each time you reload it), or animals, or applying a reference style to a given image. But those mostly appear to have stayed in the realm of research. More recently, however, new approaches have emerged that can generate images of arbitrary subject matter and are easy enough to use that they can be incorporated into actual workflows. In their simplest form, these tools take only a short text describing the image as input. They return a generated image that tries to adhere to that prompt as closely as possible. Furthermore, these tools can create new images from existing ones in a variety of interesting ways. They can turn a rough sketch into a detailed piece, create small variations of an input image, or replace a section of it with something newly generated, like a more advanced version of content-aware fill.

The most talked-about and most easily accessible use of these tools at the moment is the one where only text goes in and an image comes out. But the technology actually offers much finer control over composition and details to guide the AI toward a desirable result. And although these tools currently produce images that are usually below one megapixel by default, there are also approaches for intelligent upscaling and for stitching multiple generated images together to drastically improve resolution.

I do photography purely as a hobby, because I enjoy both the process of creating an image and looking at the result and its esthetic properties. For me, the fun in using these tools comes from exploring the sometimes truly stunning beauty of the results. It also comes from the chance to experiment with types of imagery I could not or would not usually get involved in, like portraits of human models, luxury cars, exotic architecture, fantastical landscapes and so on. Here are some results I got using a local installation of Stable Diffusion with a slightly tweaked version of the relevant Python script:

'Beautiful rose princess, standing in a vivid landscape. Photorealism, art by artgerm, detailed, RTX, 35mm 1.4'

This particular AI model is trained on freely available images found across the internet. In particular, it is trained on the alternative (alt) description texts associated with web images. These texts are displayed if the image cannot be loaded, or read aloud for people with visual impairments. So adding a variety of words commonly found on the internet to the end of a natural-language prompt can help guide the look of the image. A side effect of this training data is that the model has learned that people often put little watermarks on images. It tries to imitate this behavior in its own creations, as you can see in some of these examples.

I would never go through the process necessary to create such images with a camera. That's just not the kind of photography I enjoy, and it would involve too much work and expense for a hobby anyway. But each of these images takes just under 20 seconds to create, and it is quite addictive to get something this visually pleasing for so little effort. It almost becomes a game: trying to steer the computer in the direction that best suits the idea in one's mind, or just exploring what it comes up with when given room to experiment on its own. Here are some more that I liked quite a lot. Some use prompts I created myself, and some are only slightly tweaked from the vast gallery of generations found on Lexica:

As I said, I find it mind-blowing that technology has reached this point. I can write a short sentence, and a program that is completely free and runs on my mid-range consumer graphics card can translate that text into an image. That image often accurately captures what the sentence describes.

On the one hand, I am very excited for this technology to become even more accessible by being integrated into Photoshop, for example. Then one could actually tell content-aware fill exactly what to put into the filled area. One could also select a section of an image and ask for variations of it. Or one could ask for variations of a face, for example to fix a group shot where one person happened to blink or talk at the wrong moment.

On the other hand, I am curious where the discussion around this will go. Text and speech recognition have come a long way. But somehow it seems to me that this form of human-machine interaction is the first one that makes it tangible just how well state-of-the-art neural networks can 'understand' natural language.

There are, however, controversies about devaluing the work of real digital artists who produce these kinds of results through years of experience and practice. There are also controversies about the fact that one can imitate famous styles simply by typing the name of the respective artist.

I am also interested in seeing how this will impact the camera market. Who in the mainstream population even needs a low-end camera kit anymore? You can handle all your everyday documentation photography with your phone and create the Instagram-style show-off pieces with a computer. Will this level of esthetic quality drown out genuine photography on mainstream social media platforms, where even photography is already heavily enhanced with filters anyway?

And of course the implications for misinformation are also huge. This model has no restrictions: you can type in the name of any sufficiently famous politician and get a photorealistic image of them doing whatever you wrote in the prompt. But the use of photography as evidence has been getting ever shakier anyway. So I think this just puts the final nail into an already well-sealed coffin.

If this is old hat for everybody reading, so be it. I just felt like sharing some of this. Perhaps I can also find out about other interesting applications and use cases, if somebody here has used these tools and is willing to share some insight into how they use them.
